Linkless ANFORA (Patented)

Methodology

- Controlled evaluation study with 20 participants

- Wizard-of-Oz technique

- Quantitative and qualitative analysis

Problem Space

Cell phones are part of our daily lives, but they demand constant visual attention, even in distracting or unsafe situations. For example, browsing mobile news while walking down a busy street can increase the risk of accidents.

The problem lies in mobile applications that require continuous touching and clicking, which keep our eyes on the screen. But how can we reduce people’s need to look at their devices, so they can stay safe while consuming content on the go?

Music already offers a solution to this problem. People can listen to playlists on their iPods or phones without constantly checking the screen. What if we could apply the same idea to information on the web?

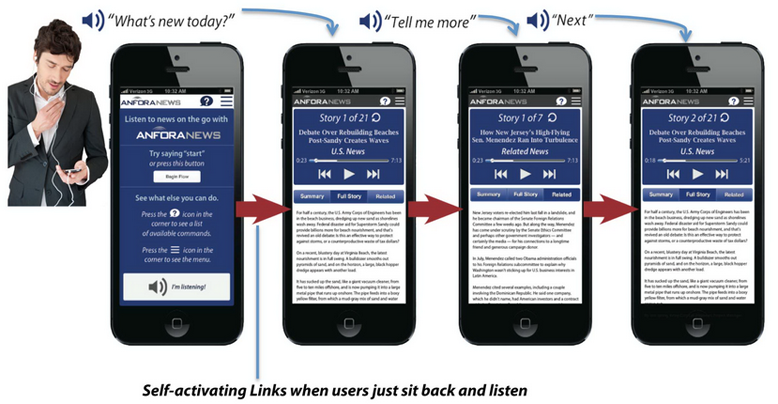

ANFORA does just that: it turns web content into aural flows, playlists you can listen to while on the go. However, navigating these aural flows with touch or gestures still forces users to look at the screen. One way to reduce this visual dependence is to support a rich set of voice commands.

Research Question

When navigating aural flows on the go, do voice commands reduce visual interaction with the device and improve the user experience compared to clicking buttons?

Approach

We introduced Linkless ANFORA, which uses voice commands to interact with aural flows. We evaluated it in a controlled study with 20 participants and compared it against button-based navigation.

Impact

- Introduced Linkless ANFORA, a solution that minimizes visual attention to mobile devices and enhances safety while consuming web content on the go

- Demonstrated how voice commands can improve user experience compared to traditional button-based navigation

My Responsibilities

- Explored different voice command options for interacting with aural flows

- Planned and ran a controlled study with 20 participants

- Analyzed results and identified key design implications for eyes-free browsing

Click here to access the patent document.

Click here to watch a YouTube video on Linkless ANFORA.

Funding

NSF-funded research project “Navigating the Aural Web”, PI: Dr. Davide Bolchini

Funding Opportunities for Research Commercialization and Economic Success (FORCES), IUPUI Office of the Vice-Chancellor for Research (OVCR): “Eyes-Free Mobile Navigation with Aural Flows”, PI: Dr. Davide Bolchini