ANFORA (Aural Navigation Flows on Rich Architectures) News

Methodology

- Conceptual model design

- Low- and high-fidelity prototyping

- Exploratory user study with 20 participants

- Quantitative and qualitative analysis

Problem Space

Mobile web use on the go is becoming more common, and people often multitask while browsing. For example, someone may check the news while walking or even driving, where looking at the screen can be distracting or dangerous. Most mobile web interfaces rely on visual attention, which doesn’t work well in these situations. Studies show that audio-based interfaces are slower to use, but they are less distracting than visual ones.

Research Question

How can we redesign complex web information architectures into an audio format so that users can browse content in a simple, linear way, with minimal interaction with the device and support for “eyes-free” experiences?

Approach

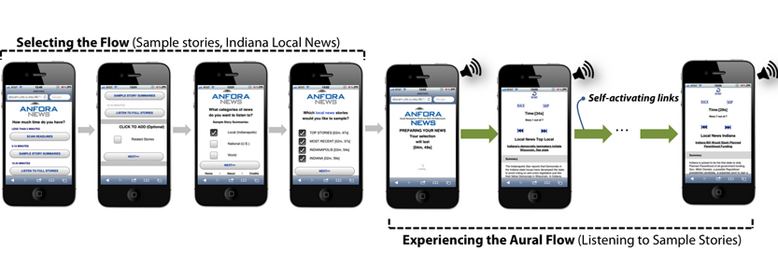

We proposed ANFORA (aural navigation flows on rich architectures), a framework for transforming web information architectures into a set of aural flows.

To test the idea, we applied it to news websites. They are content-heavy, have complex navigation structures, and are widely consumed on mobile devices.

Example scenario: Imagine you want to catch up on local news during a 10-minute walk to work. With ANFORA News, you can:

- Create a personalized playlist of news stories by selecting categories and levels of detail, like summaries and related stories

- Listen hands-free, skip stories, move between sections, pause, and resume

- Access stories from major outlets like CNN, The New York Times, or NPR
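The playlist idea in this scenario can be illustrated with a small sketch. This is not the ANFORA implementation. It is a minimal illustration, assuming a site is modeled as a dictionary of categories mapped to stories. The names `Story`, `FlowItem`, `build_aural_flow`, and `FlowPlayer` are hypothetical and chosen only for this example. It shows how a hierarchical structure might be flattened into one linear flow that a listener can play, skip through, or jump across by section.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class Story:
    # Hypothetical content model: one news story with two levels of detail.
    title: str
    summary: str
    full_text: str
    related: list[Story] = field(default_factory=list)


@dataclass
class FlowItem:
    category: str
    title: str
    text: str  # the text a speech engine would read aloud


def build_aural_flow(sections, categories, detail="summary", include_related=False):
    """Flatten the chosen categories into one linear, ordered list of items."""
    flow = []
    for category in categories:
        for story in sections.get(category, []):
            text = story.summary if detail == "summary" else story.full_text
            flow.append(FlowItem(category, story.title, text))
            if include_related:
                # Related stories are queued as summaries right after their parent.
                for rel in story.related:
                    flow.append(FlowItem(category, rel.title, rel.summary))
    return flow


class FlowPlayer:
    """A cursor over the flow; every control only moves or freezes the cursor."""

    def __init__(self, flow):
        self.flow = flow
        self.position = 0
        self.playing = True

    def current(self):
        if self.position < len(self.flow):
            return self.flow[self.position]
        return None  # end of the flow

    def skip_story(self):
        self.position = min(self.position + 1, len(self.flow))

    def next_section(self):
        item = self.current()
        if item is None:
            return
        # Advance past every remaining item in the current category.
        while self.current() is not None and self.current().category == item.category:
            self.position += 1

    def pause(self):
        self.playing = False

    def resume(self):
        self.playing = True
```

In this sketch, every listener action reduces to moving a single position in a list, which is one way to read the goal of linear browsing with minimal device interaction.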

We studied the usability and navigation experience of ANFORA with 20 frequent news readers in a mobile setting. Results showed that users found the aural flows enjoyable, easy to use, and well-suited for eyes-free browsing. Future work will explore adding simple voice commands to make interaction even easier.

Impact

- Introduced ANFORA, a design framework that converts web content into aural flows

- Enabled safer, eyes-free consumption of content-rich websites while on the go, reducing distraction and improving accessibility

My Responsibilities

- Led strategic UX research to understand current challenges with consuming mobile web content while on the go

- Proposed and co-developed ANFORA, a new design idea to address these challenges

- Designed and ran a usability study with 20 frequent news readers in mobile settings

A YouTube video on ANFORA News is available.

Funding

NSF-funded research project “Navigating the Aural Web”, PI: Dr. Davide Bolchini

Publications

Ghahari, R. R., & Bolchini, D. (2011, September). ANFORA: Investigating aural navigation flows on rich architectures. In Web Systems Evolution (WSE), 2011 13th IEEE International Symposium on (pp. 27-32). IEEE.

Rohani Ghahari, R., George-Palilonis, J., & Bolchini, D. (2013). Mobile Web Browsing with Aural Flows: an Exploratory Study. International Journal of Human-Computer Interaction.